Predictable behavior in an AI agent requires that its operational logic be explicitly defined. When all instructions are bundled into a single prompt, the sequence of actions is left to the model's interpretation. That lack of direct control makes a single prompt unsuitable for applications that must perform consistently. ElevenLabs Agent Workflows provide the necessary structure, using a visual editor to map out the exact logic for every conversational step.

This visual method of building Voice AI agents offers several advantages:

- Decomposition of Tasks: Complex problems can be broken down into smaller, manageable tasks, each handled by a specialized Subagent with its own specific prompt, tools, and knowledge base.

- Improved Response Quality: By scoping the context for each Subagent, the precision of responses can be improved, and access to sensitive information can be limited.

- Cost and Latency Optimization: Each step can use the model that fits it. For instance, a lightweight model could handle initial request classification, while a more powerful model could be reserved for complex reasoning, which keeps both cost and response time down.

- Explicit Control and Guardrails: Developers can visually map the flow of conversation, declare decision points, and control handoffs between Subagents or to human agents. This makes it possible to embed business rules, validations, and escalation paths directly into the workflow.

This article first breaks down the individual components, from Subagents to Dispatch Tools, and explains the logic that controls the conversation's direction. Following this technical breakdown, you will find a practical guide demonstrating the assembly of these components into a functional agent capable of handling a real-world customer service scenario.

The Building Blocks of Conversation: Understanding the Node Types

Workflows are constructed from various types of nodes, each with a specific function in guiding the conversation. Think of these nodes as the decision points and action-takers within your agent's logic.

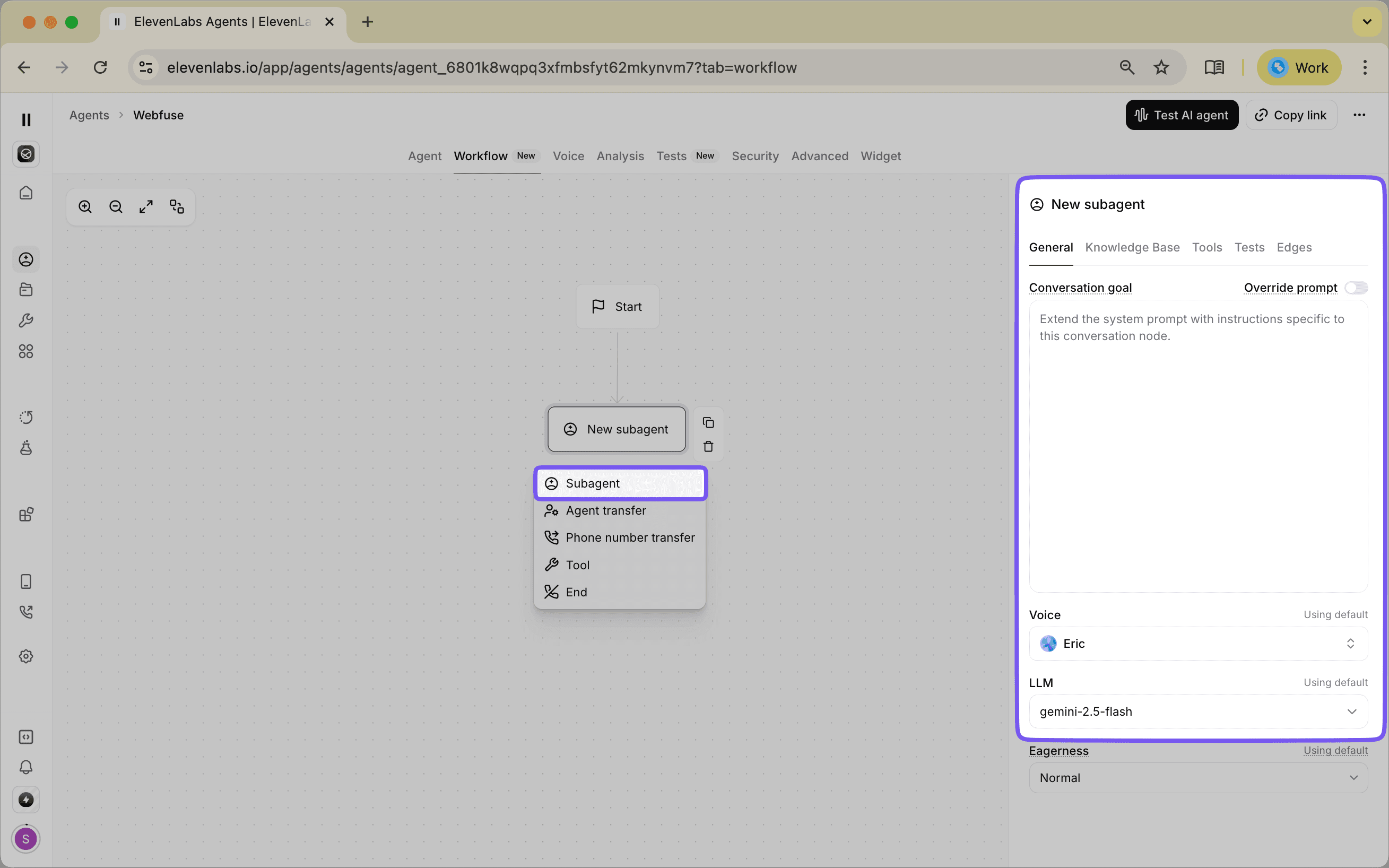

Subagent Nodes

Subagent nodes are where you can modify the agent’s behavior at a specific moment in the conversation. Instead of a single, rigid personality, you can instruct the agent to adopt different characteristics as needed. For example, you could use a quick, lightweight language model for initial greetings and then switch to a more powerful model for complex problem-solving.

With a Subagent node, you can adjust:

- System Prompt: Change or add to the agent's core instructions.

- LLM Selection: Swap between different language models to balance speed and accuracy.

- Voice Configuration: Alter the agent's voice, speed, or tone.

- Tools and Knowledge Base: Provide the agent with specific tools or information relevant only to that stage of the conversation.

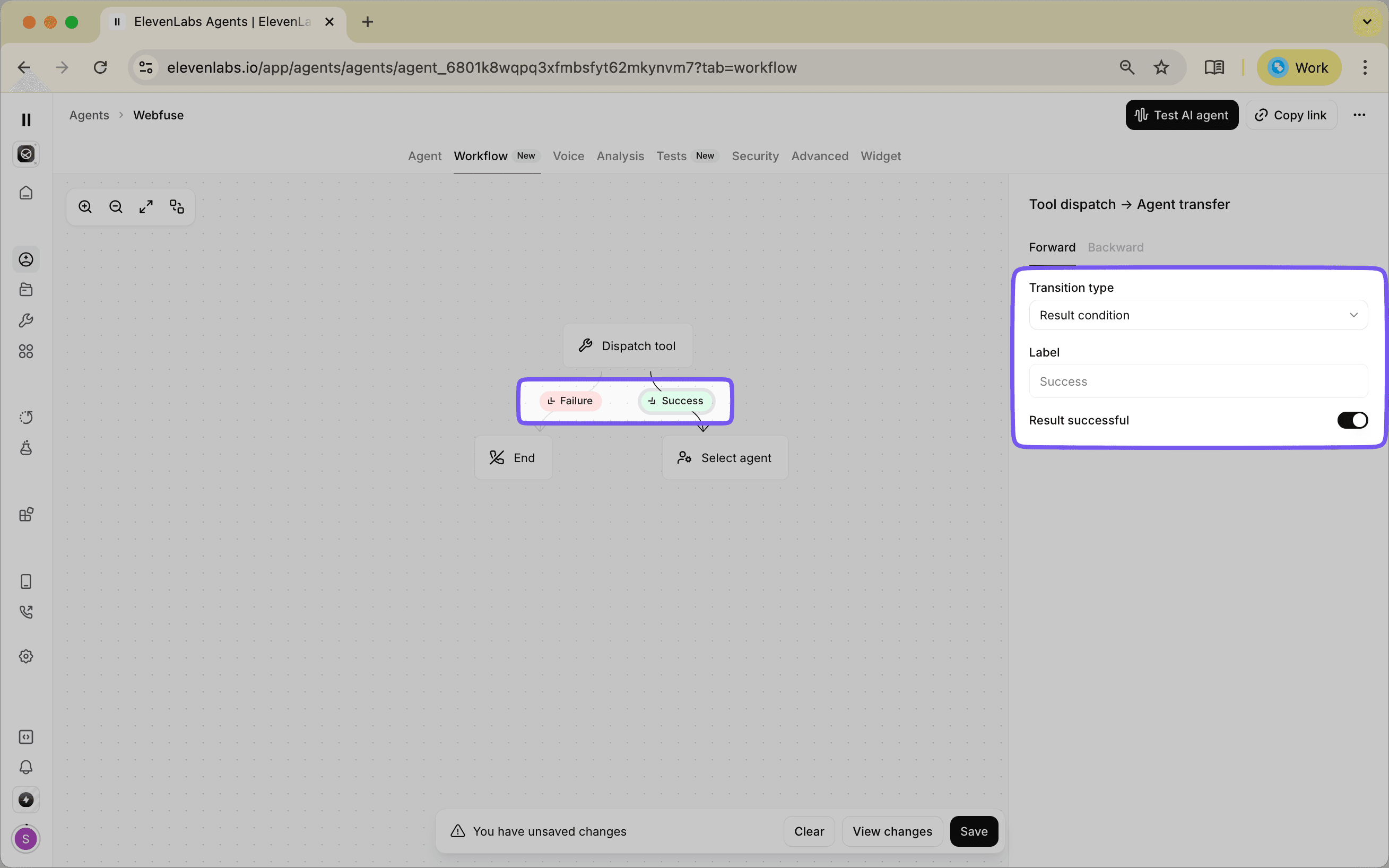
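One way to picture the adjustable settings listed above is as a small override record layered on top of the agent's base configuration. The sketch below is plain Python, not the ElevenLabs API; the class names, field names, and the model and voice identifiers are all hypothetical placeholders.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class AgentConfig:
    """Base settings for the agent (all names are illustrative)."""
    system_prompt: str
    llm: str
    voice: str
    tools: tuple = ()


@dataclass(frozen=True)
class SubagentOverride:
    """Settings a Subagent node changes; None means 'inherit from the base'."""
    extra_prompt: Optional[str] = None
    llm: Optional[str] = None
    voice: Optional[str] = None
    tools: Optional[tuple] = None


def apply_override(base: AgentConfig, node: SubagentOverride) -> AgentConfig:
    """Return the configuration in effect while this Subagent node is active."""
    prompt = base.system_prompt
    if node.extra_prompt:
        prompt = f"{prompt}\n{node.extra_prompt}"
    return replace(
        base,
        system_prompt=prompt,
        llm=node.llm or base.llm,
        voice=node.voice or base.voice,
        tools=node.tools if node.tools is not None else base.tools,
    )


# Hypothetical example: a billing Subagent swaps the model and scopes the tools.
base = AgentConfig("You are a support assistant.", "small-fast-model", "voice-a")
billing = SubagentOverride(
    extra_prompt="Focus on billing questions.",
    llm="large-reasoning-model",
    tools=("lookup_invoice",),
)
effective = apply_override(base, billing)
```

Here the billing Subagent inherits the base voice but gets its own model, an extended prompt, and a tool list limited to what that stage needs.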

Dispatch Tool Node

While a Subagent might have access to several tools, the Dispatch Tool Node guarantees that a specific tool is used at a certain point in the flow. This provides a level of control not available in standard tool use, where the language model decides if a tool is necessary. This is important for actions that must happen, such as verifying a user's identity or processing a payment.

A key feature of this node is its ability to direct the conversation based on the outcome of the tool.

- On Success: If the tool executes correctly (e.g., a payment is processed), the workflow follows a "success" path.

- On Failure: If the tool returns an error (e.g., a credit card is declined), the conversation is routed down a separate "failure" path, allowing for specific error handling.

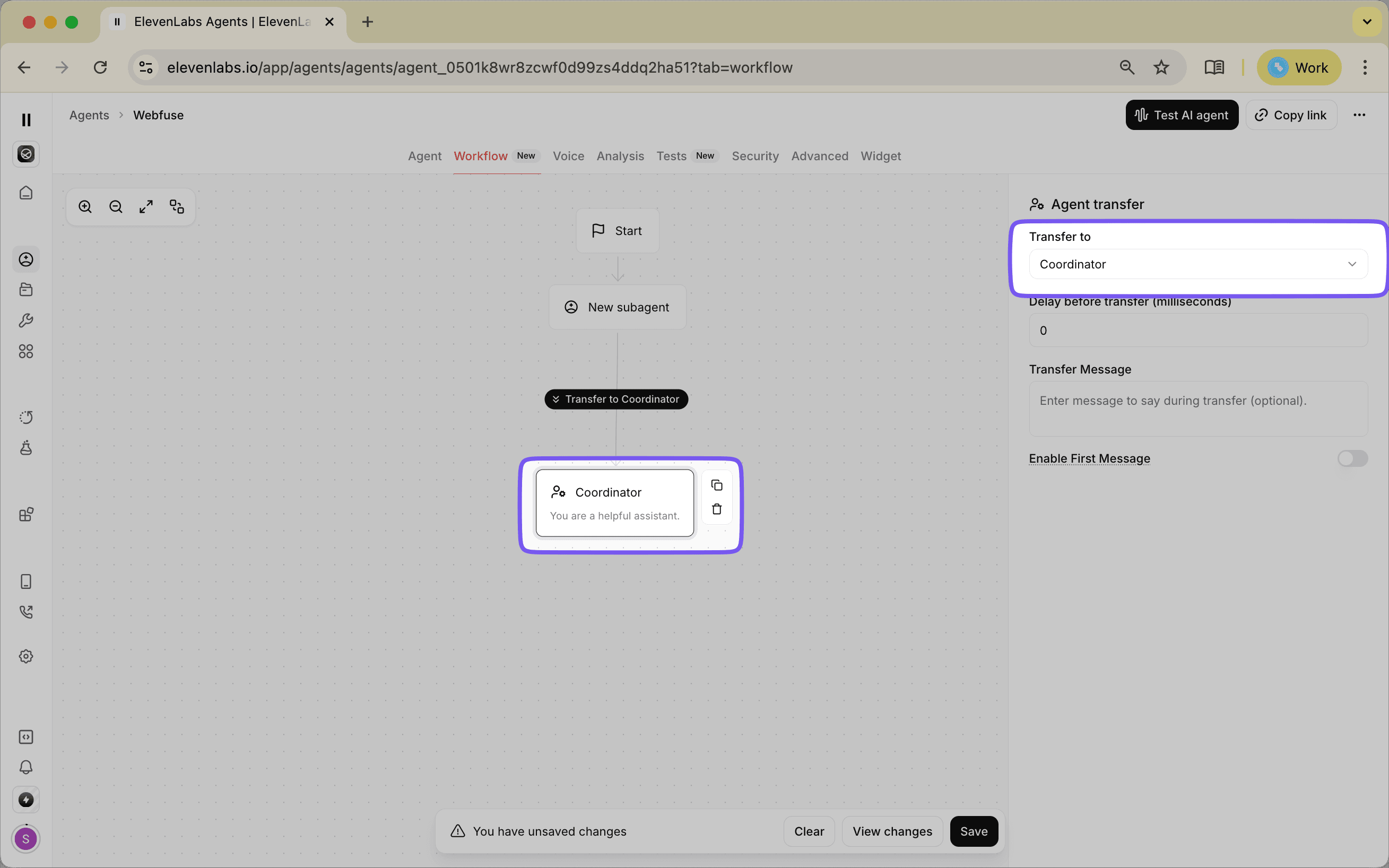
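The success and failure branching described above can be sketched as a function that always runs the tool and then reports which branch to follow. This is an illustration of the control flow only: the `dispatch` helper, the branch labels, and the `charge_card` tool with its made-up limit are assumptions, not part of the ElevenLabs platform.

```python
from typing import Callable, Dict, Tuple


def dispatch(tool: Callable[[Dict], Dict], args: Dict) -> Tuple[str, Dict]:
    """Run the tool unconditionally and return (branch, payload)."""
    try:
        result = tool(args)
    except Exception as exc:  # any tool error selects the failure branch
        return "on_failure", {"error": str(exc)}
    return "on_success", result


def charge_card(args: Dict) -> Dict:
    """Hypothetical payment tool that declines amounts above a made-up limit."""
    if args["amount"] > 500:
        raise ValueError("card declined")
    return {"charged": args["amount"]}


branch_ok, payload_ok = dispatch(charge_card, {"amount": 20})
branch_bad, payload_bad = dispatch(charge_card, {"amount": 900})
```

The point of the pattern is that the model never decides whether the tool runs; it only sees the outcome, and the workflow picks the next node from the branch label.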

Agent Transfer Node

This node handles a transfer of the conversation from one AI agent to another, which allows for the creation of modular and scalable support systems. For instance, a general "Coordinator" agent can handle initial user queries and then delegate to a more specialized agent.

Common use cases include transferring from a general agent to agents specializing in:

- Billing and account issues

- Technical support

- Scheduling or availability inquiries

This process maintains the conversation's context, ensuring the user does not need to repeat information after the transfer.

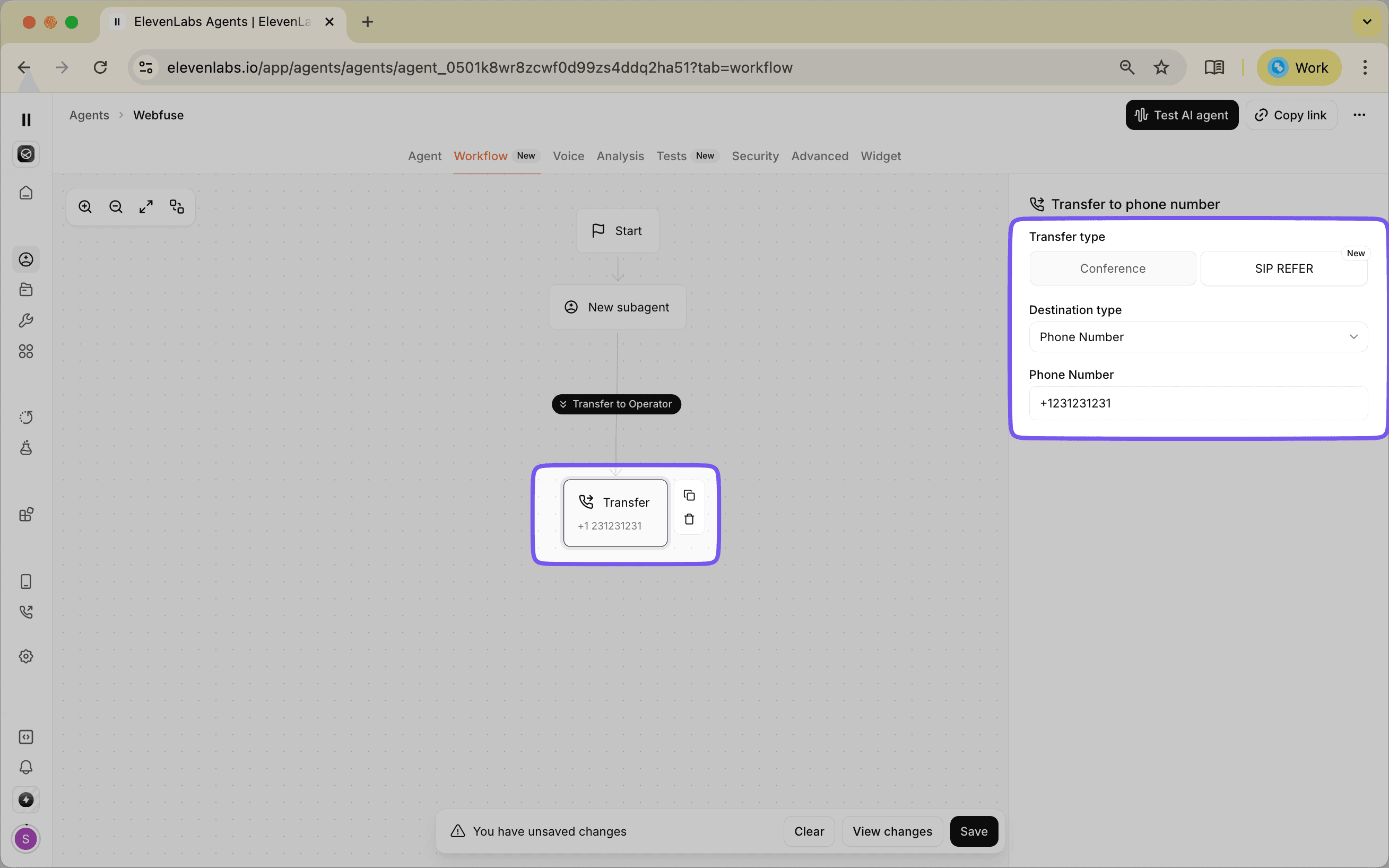

Transfer to Number Node

When a problem requires human judgment or a user explicitly asks to speak with a person, the Transfer to Number Node facilitates a handoff to a human agent via a phone call or SIP URI. This acts as an important escalation path when the AI's capabilities are exceeded.

The node is configured to manage the entire handoff process:

- It can play a custom message to the user while they wait for the transfer.

- It can provide a summary of the conversation to the human agent who receives the call, giving them immediate context.

- It supports different transfer types, like adding the human to a conference call with the user.

End Node: Gracefully Concluding the Conversation

The End Node terminates the conversation flow in a controlled manner. Every complete conversational path should lead to an End Node to ensure the interaction concludes politely and appropriately once the user's query has been resolved or the intended action is complete. This prevents the conversation from being left in an ambiguous or open-ended state.

Directing the Conversational Journey: Edges and Flow Control

If nodes are the destinations in your conversational map, edges are the roads that connect them. In the ElevenLabs Agent Workflows editor, these are the visual lines you draw between nodes. They represent the possible paths the conversation can take and dictate the logic that moves a user from one step to the next.

The flow of the conversation is directed by specific rules and conditions applied to these edges.

- Intent-Based Routing: The primary method for directing flow is based on the user's intent. The AI model analyzes the user's input and directs the conversation along the edge that matches the identified purpose. For example, if a user says, "I want to check my balance," the workflow follows the edge labeled "check_balance" to a node designed for that task.

- Conditional Logic: You can set up specific conditions on an edge that must be met for the conversation to proceed along that path. This allows for more granular control. A condition could be based on information gathered earlier in the conversation, such as the user's account type or a previous action they took.

- Tool-Based Outcomes: As seen with the Dispatch Tool Node, the success or failure of a tool's operation can be a deciding factor. An edge connected to the "On Success" output of a node will be followed if the tool works, while an "On Failure" edge provides an alternative path for handling errors.

- Default Paths: It is good practice to establish a default or "fallback" edge from a node. This path is taken if the user's input doesn't match any of the other defined intents or conditions. This ensures the conversation doesn't come to a dead end and can handle unexpected queries gracefully, perhaps by asking for clarification or offering a menu of options.

By visually connecting nodes and defining the rules on the edges between them, you create an explicit and understandable map of your agent's logic. This makes it much simpler to build, debug, and modify complex, multi-turn conversations compared to managing deeply nested code.

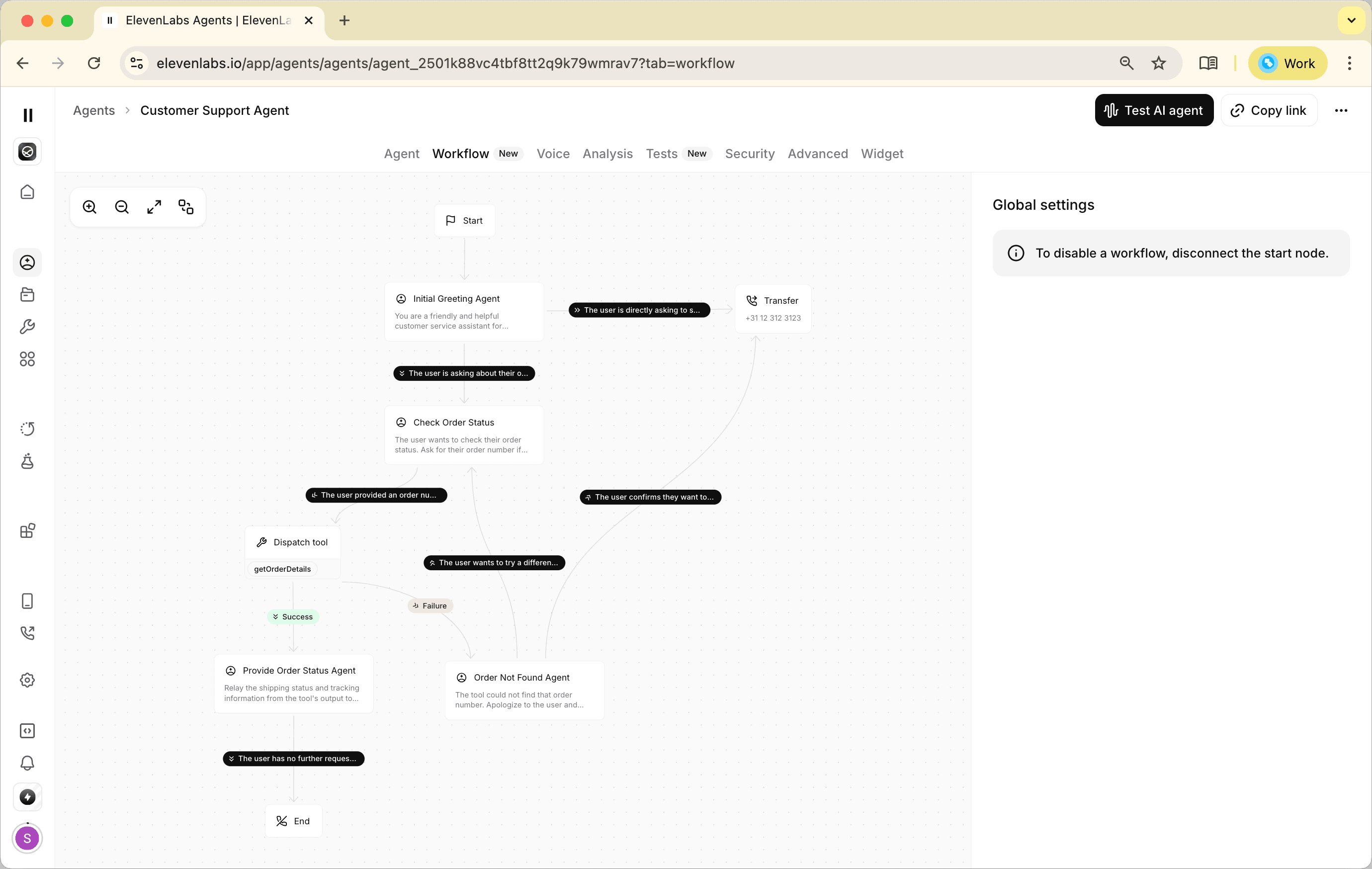
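The routing rules above amount to "take the first edge whose condition holds, otherwise take the default edge." A minimal sketch of that selection logic follows. In the real product an LLM evaluates the conditions; here a plain `state` dictionary with hypothetical `intent` and `account_type` keys stands in for that evaluation, and the `Edge` class is an invention for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Edge:
    target: str
    # None marks the default (fallback) edge.
    condition: Optional[Callable[[dict], bool]] = None


def next_node(edges: List[Edge], state: dict) -> str:
    """Return the target of the first matching edge, else the default edge."""
    default = None
    for edge in edges:
        if edge.condition is None:
            default = edge.target
        elif edge.condition(state):
            return edge.target
    if default is None:
        raise LookupError("no edge matched and no default edge is defined")
    return default


edges = [
    Edge("check_balance", lambda s: s.get("intent") == "check_balance"),
    Edge("premium_support", lambda s: s.get("account_type") == "premium"),
    Edge("clarify_request"),  # fallback when nothing else matches
]
```

Raising an error when no default exists mirrors the advice above: a node without a fallback edge is a dead end waiting to happen.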

A Practical Guide: Building a Customer Service Agent Workflow

To illustrate the application of these concepts, let's walk through the creation of a workflow for a common customer service scenario. This agent will handle basic inquiries for an online retail store, "ShopExpress," with the ability to check an order's status or direct billing questions to a human agent.

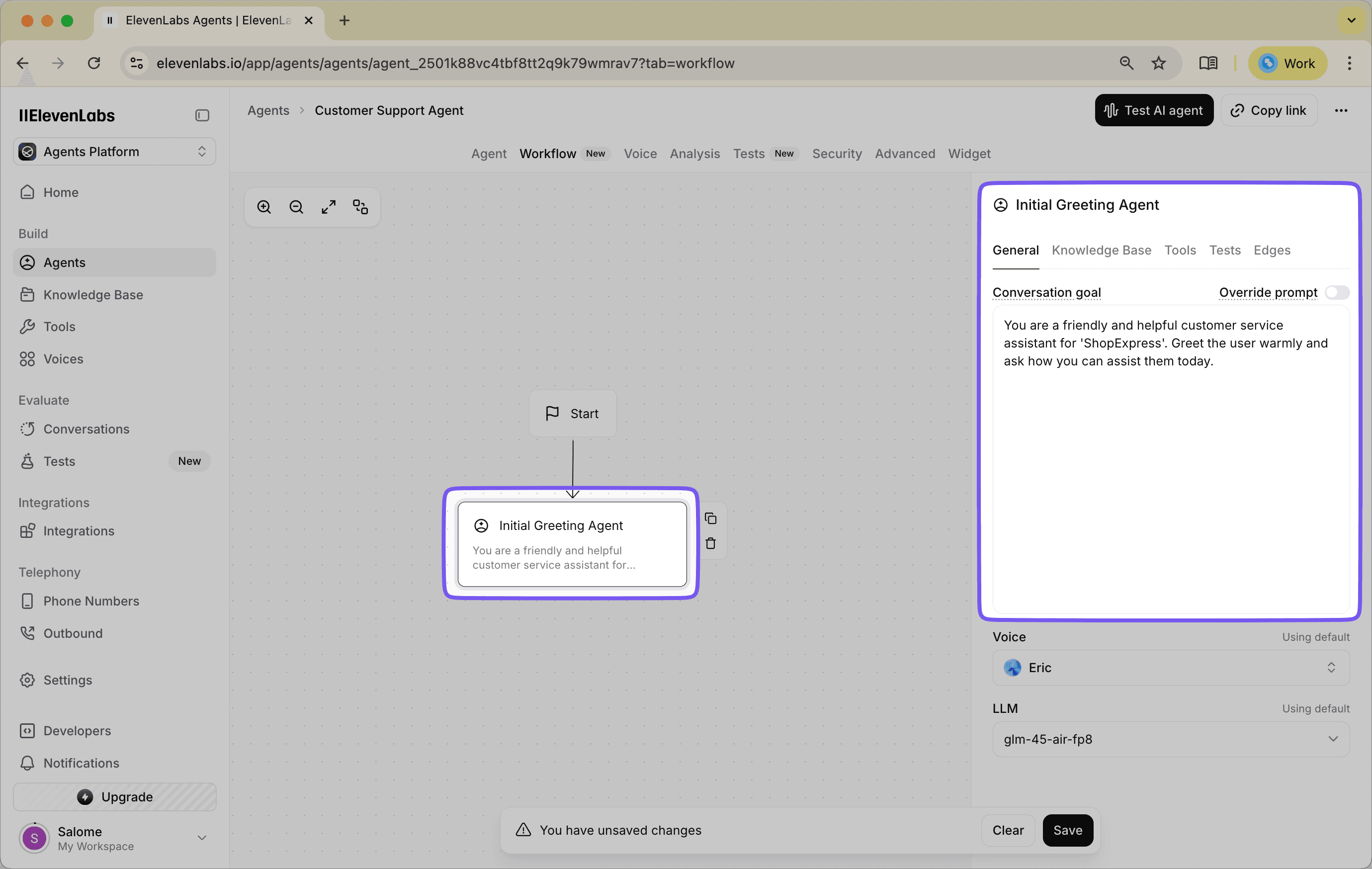

Step 1: The Initial Greeting

Every workflow begins with the Start Node. From here, we will add our first Subagent to greet the user. Click and drag from the output of the Start Node to create a new Subagent Node.

- Title: Give this node a clear name, such as Initial Greeting Agent.

- Prompt: In the node's configuration panel, enter a direct and welcoming prompt.

- Prompt Example: "You are a friendly and helpful customer service assistant for 'ShopExpress'. Greet the user warmly and ask how you can assist them today."

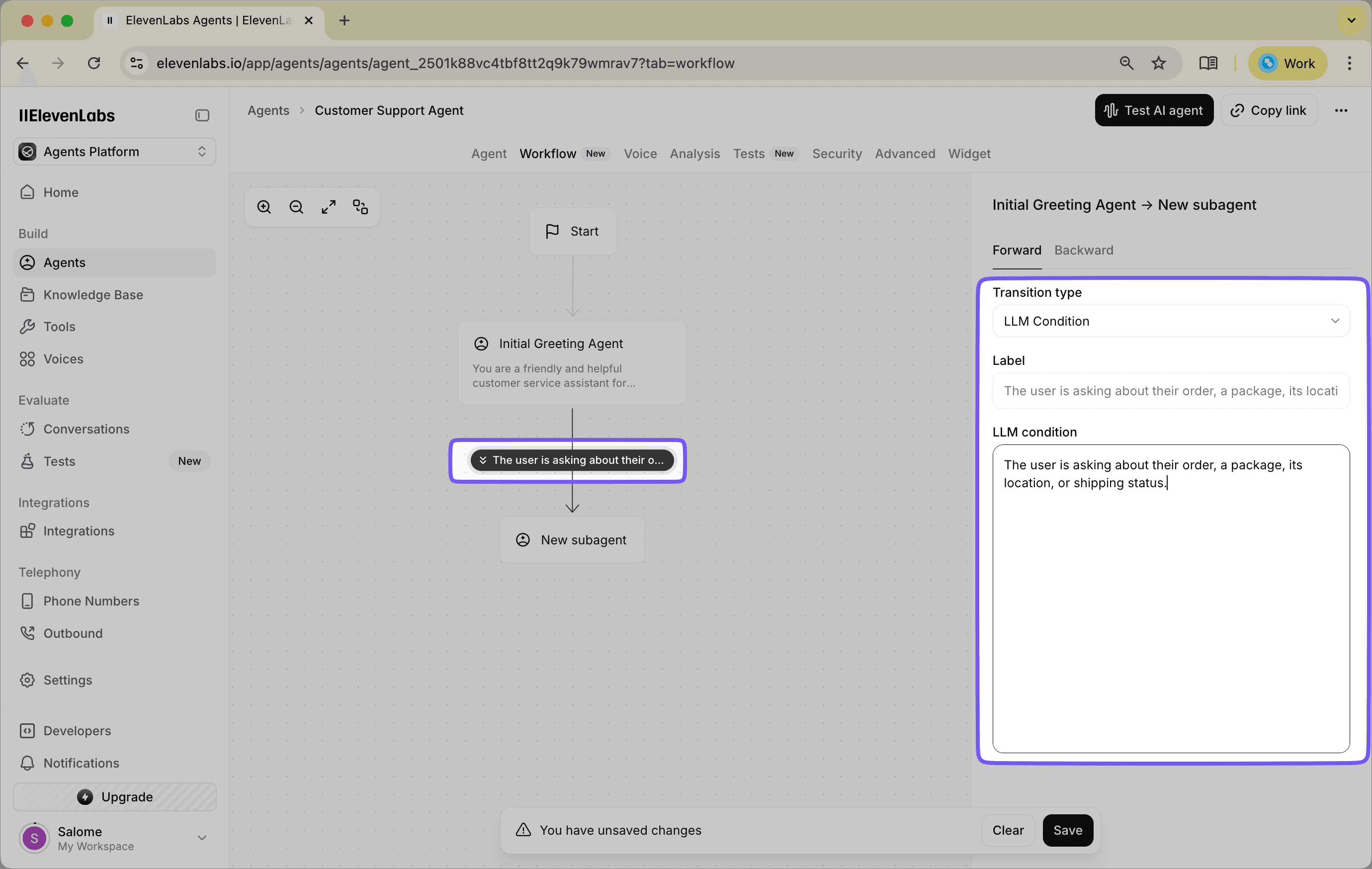

Step 2: Branching the Conversation with Conditions

From the Initial Greeting Agent, we will create different paths for the conversation to follow based on the user's request. To do this, you add new nodes from the greeting agent, which automatically creates a path between them. We will then configure the conditions on each path.

Path 1: Check Order Status

- Add a new Subagent Node from the Initial Greeting Agent. A path will appear connecting the two.

- Click the "Configure Condition" button on this new path. A sidebar will open.

- Set the Transition type to LLM Condition.

- In the LLM Condition text box, describe the user's intent.

- LLM Condition Example: "The user is asking about their order, a package, its location, or shipping status."

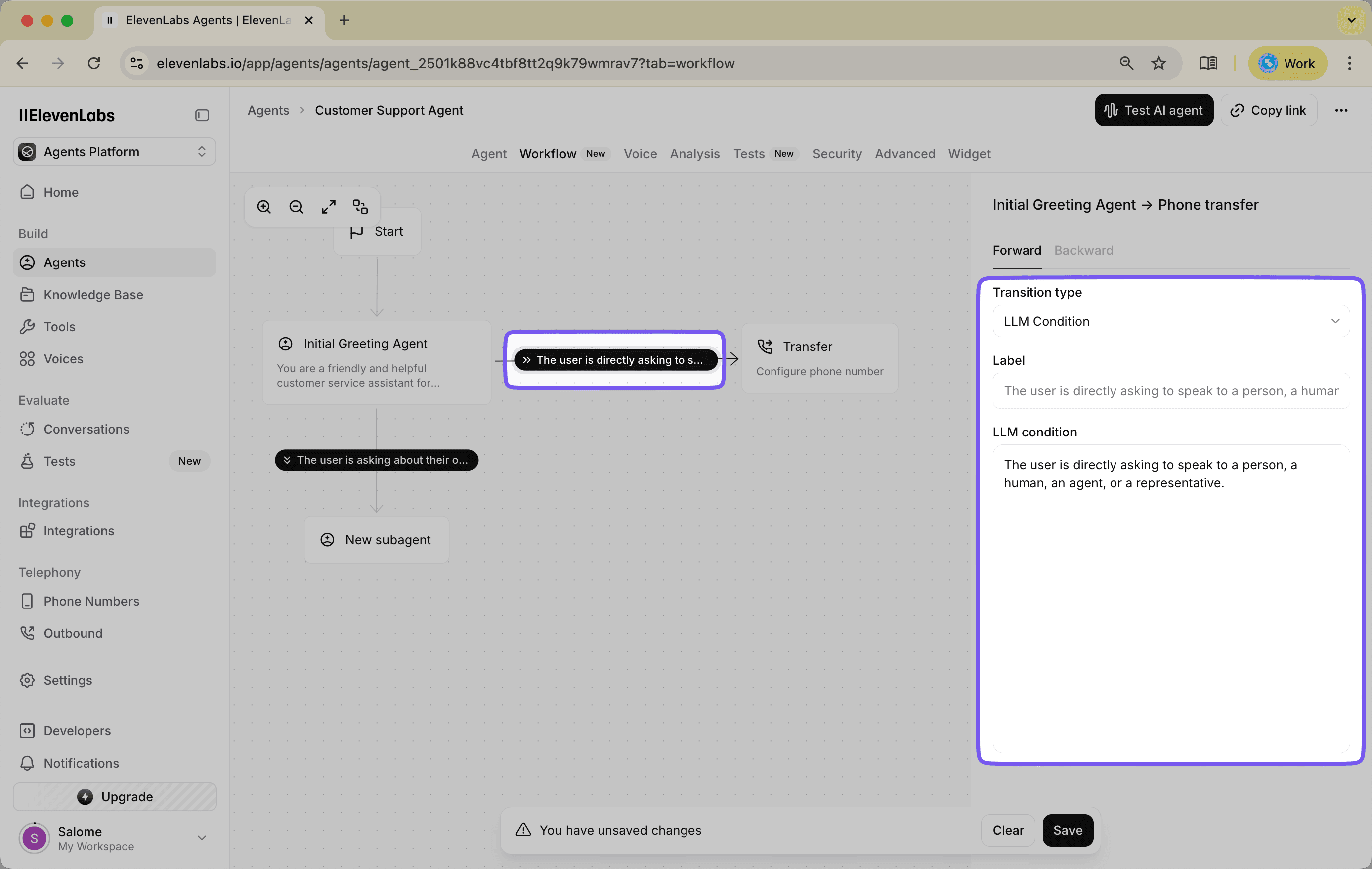

Path 2: Speak to a Human

- This time, add a Transfer to Number Node from the Initial Greeting Agent.

- Click "Configure Condition" on this new path.

- Set the Transition type to LLM Condition.

- Write the condition for an explicit request for human help.

- LLM Condition Example: "The user is directly asking to speak to a person, a human, an agent, or a representative."

The system will now analyze the user's first response and direct the conversation down the path whose condition is met.

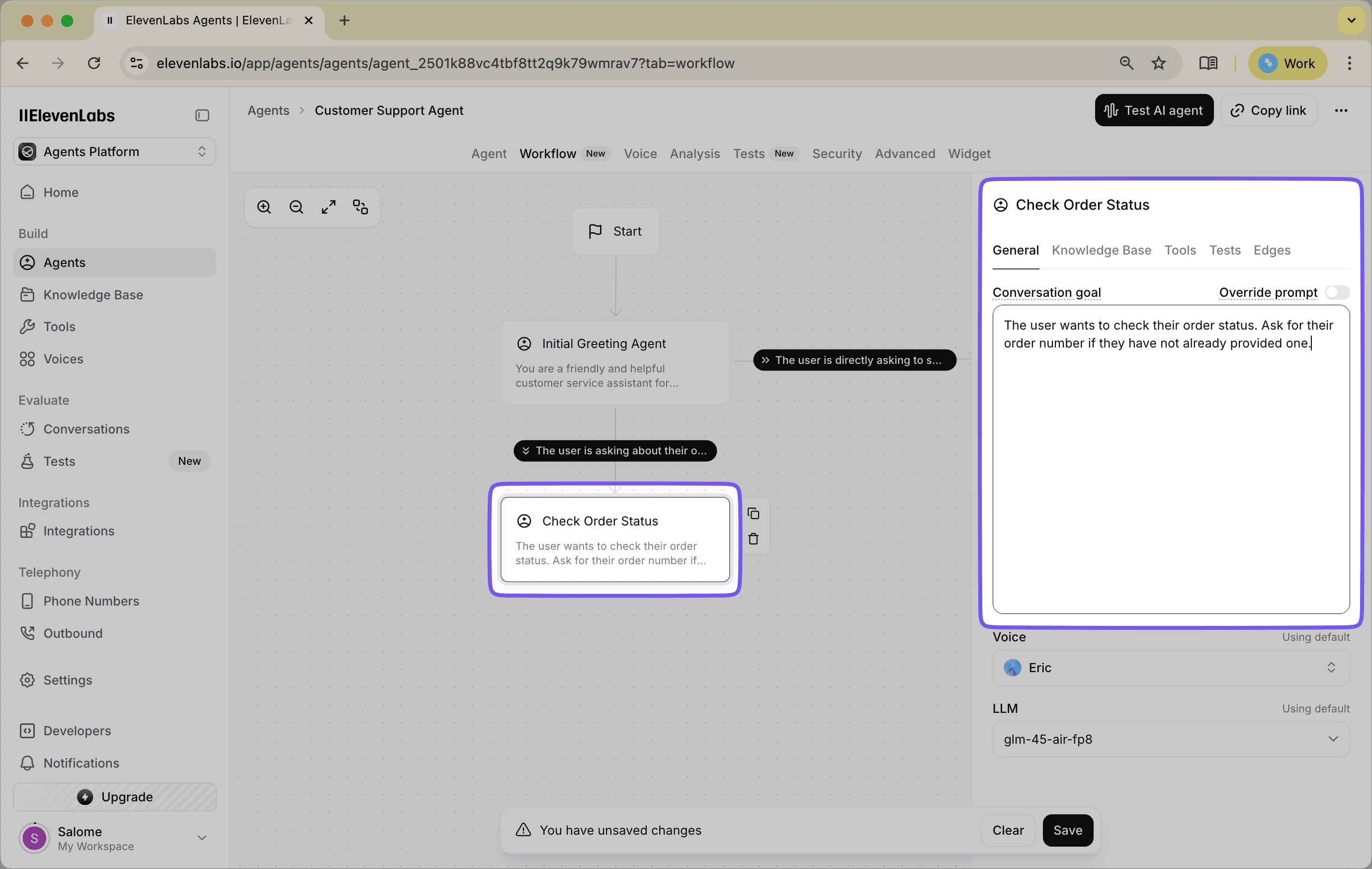

Step 3: Developing the Order Status Branch

Let's follow the Check Order Status path. The Subagent on this path needs to collect information from the user.

Configure its prompt to focus on gathering the necessary details. Prompt Example: "The user wants to check their order status. Ask for their order number if they have not already provided one."

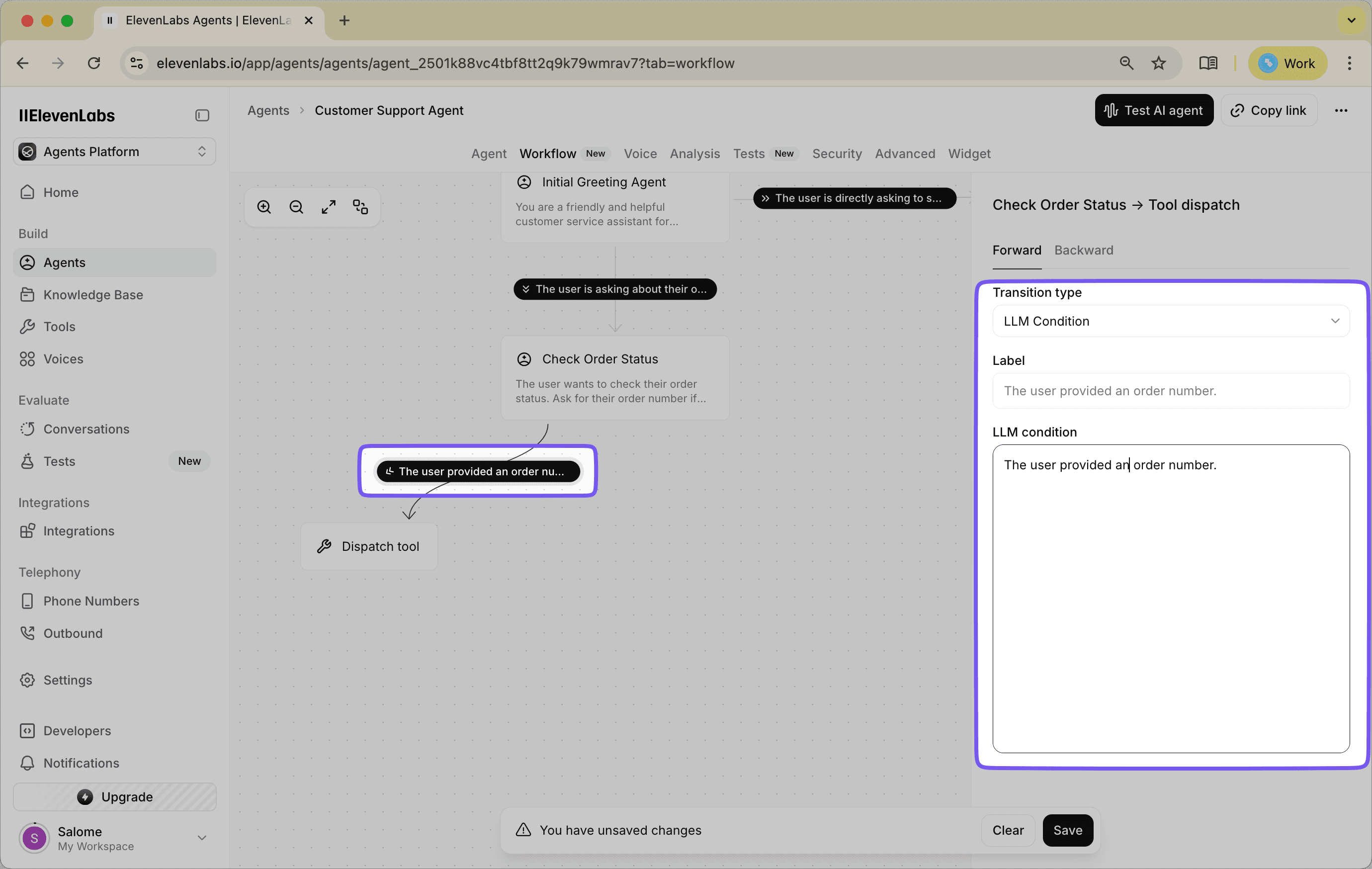

Once the agent has the order number, the workflow should use a tool to look it up.

- From the Check Order Status agent, add a Dispatch Tool Node.

- Click "Configure Condition" on this new path.

- Set the Transition type to LLM Condition.

- Write the condition that detects when the user has supplied an order number.

- LLM Condition Example: "The user provided an order number."

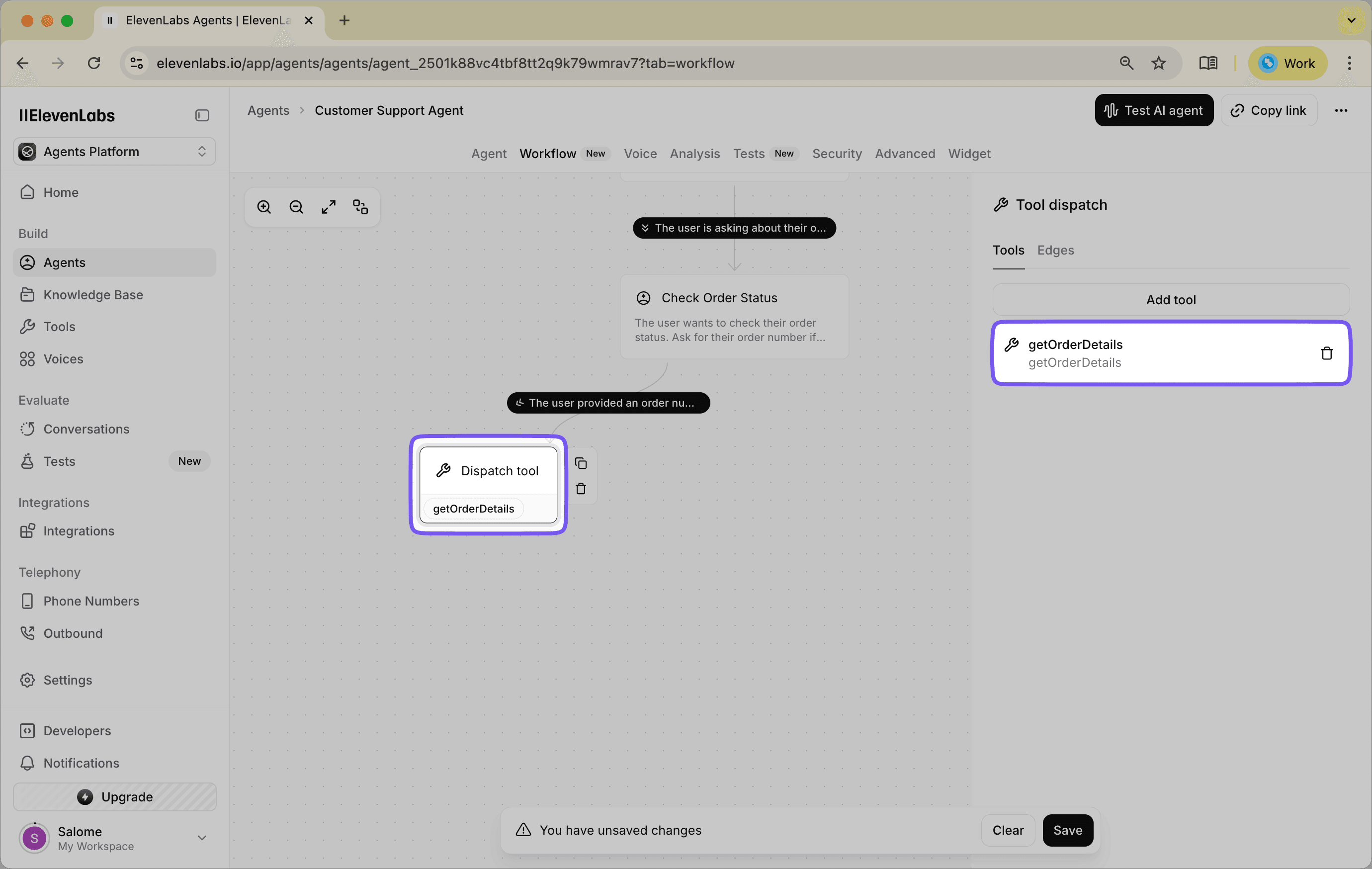

The Dispatch Tool Node on this path needs to call your API to retrieve all relevant information. For this to work, you would build a custom tool that integrates with your company's backend system. As an example for this guide, we will assume you have created a tool and named it getOrderDetails. This tool would be set up to take an order number as an input.
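To make the assumed tool concrete, here is a sketch of the backend function a `getOrderDetails` tool might call. Everything in it is hypothetical: the in-memory order table, the order number format, the `OrderNotFound` exception, and the returned field names. A real implementation would query your own order system.

```python
from typing import Dict

# Stand-in for the company's order database (made-up data).
_ORDERS: Dict[str, dict] = {
    "SE-1001": {"status": "shipped", "tracking": "TRACK123"},
}


class OrderNotFound(Exception):
    """Raised when no order matches; assumed to trigger the failure path."""


def get_order_details(order_number: str) -> dict:
    """Look up an order by number, tolerating stray spaces and lowercase."""
    key = order_number.strip().upper()
    if key not in _ORDERS:
        raise OrderNotFound(f"no order with number {key}")
    return {"order_number": key, **_ORDERS[key]}


details = get_order_details(" se-1001 ")
```

Normalizing the input matters in a voice setting, where the transcribed order number may arrive with inconsistent casing or spacing.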

Step 4: Managing Tool Success and Failure

The Dispatch Tool Node needs two separate paths for when the tool succeeds or fails.

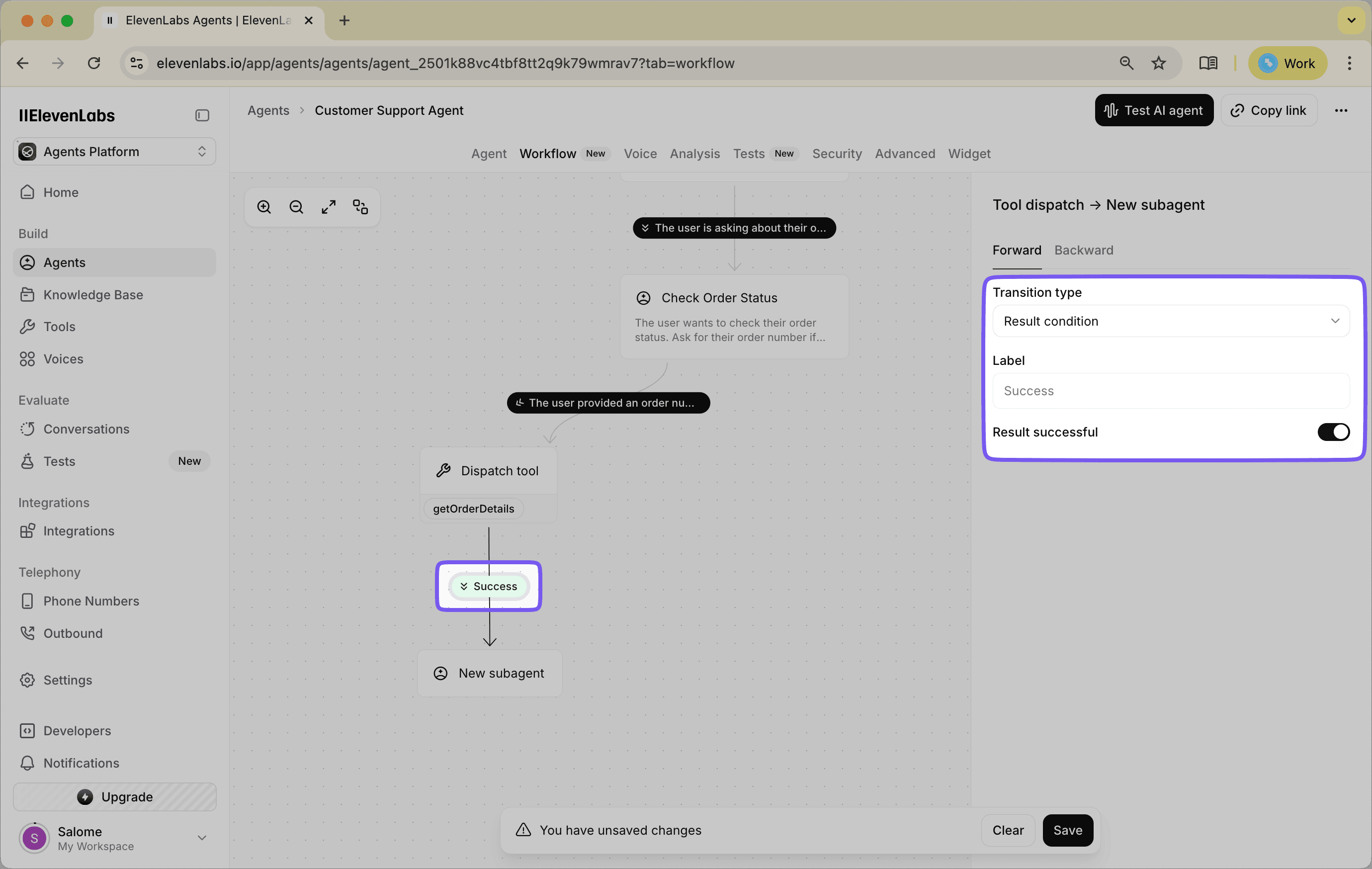

The Success Path

- Add a new Subagent Node from the Dispatch Tool Node.

- Click "Configure Condition" on the path.

- Set the Transition type to Result condition.

- Ensure the Result successful toggle is enabled.

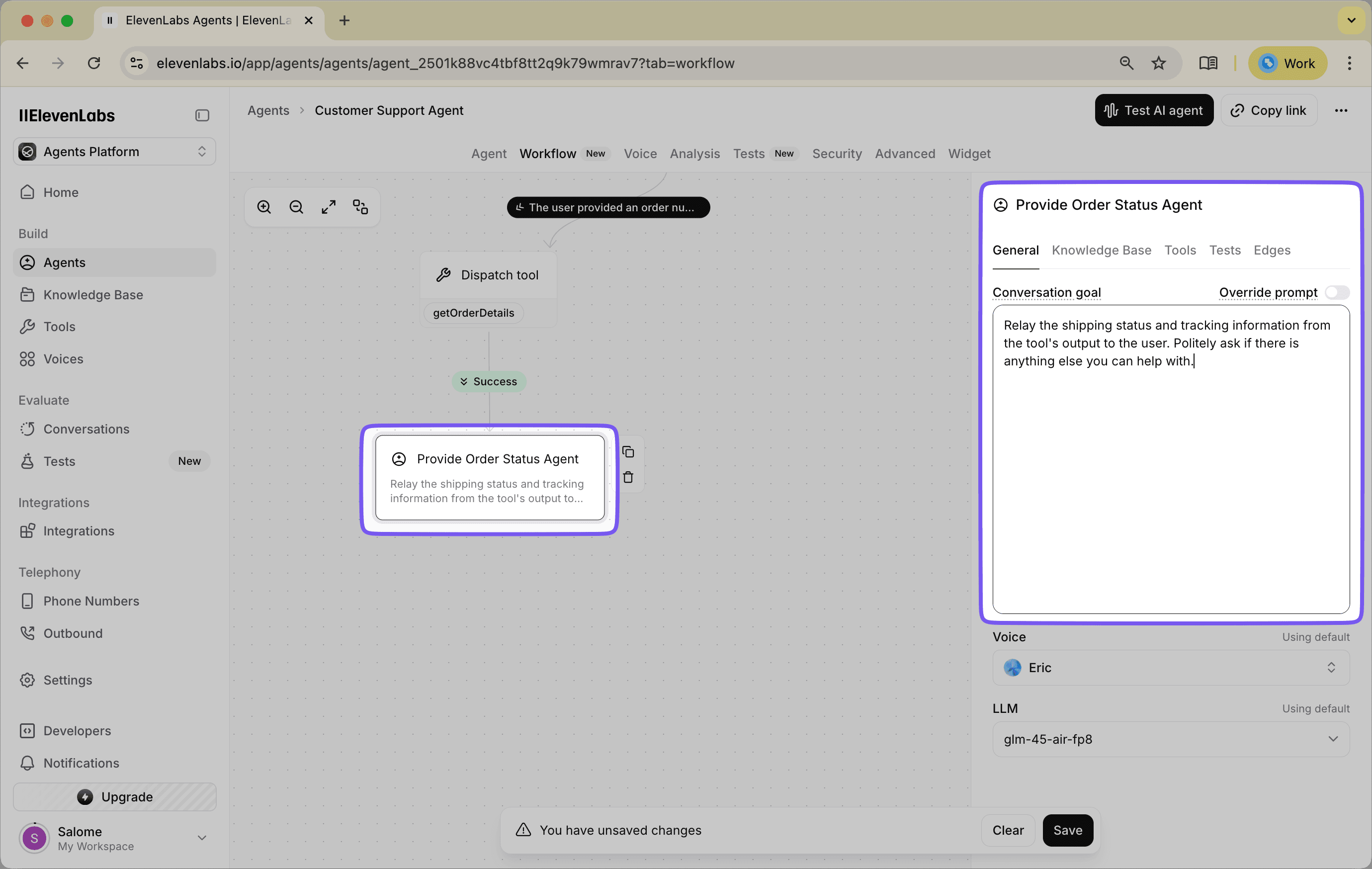

This Subagent will deliver the good news. Select the newly created subagent and fill in the following details:

- Title: Provide Order Status Agent

- Prompt Example: "Relay the shipping status and tracking information from the tool's output to the user. Politely ask if there is anything else you can help with."

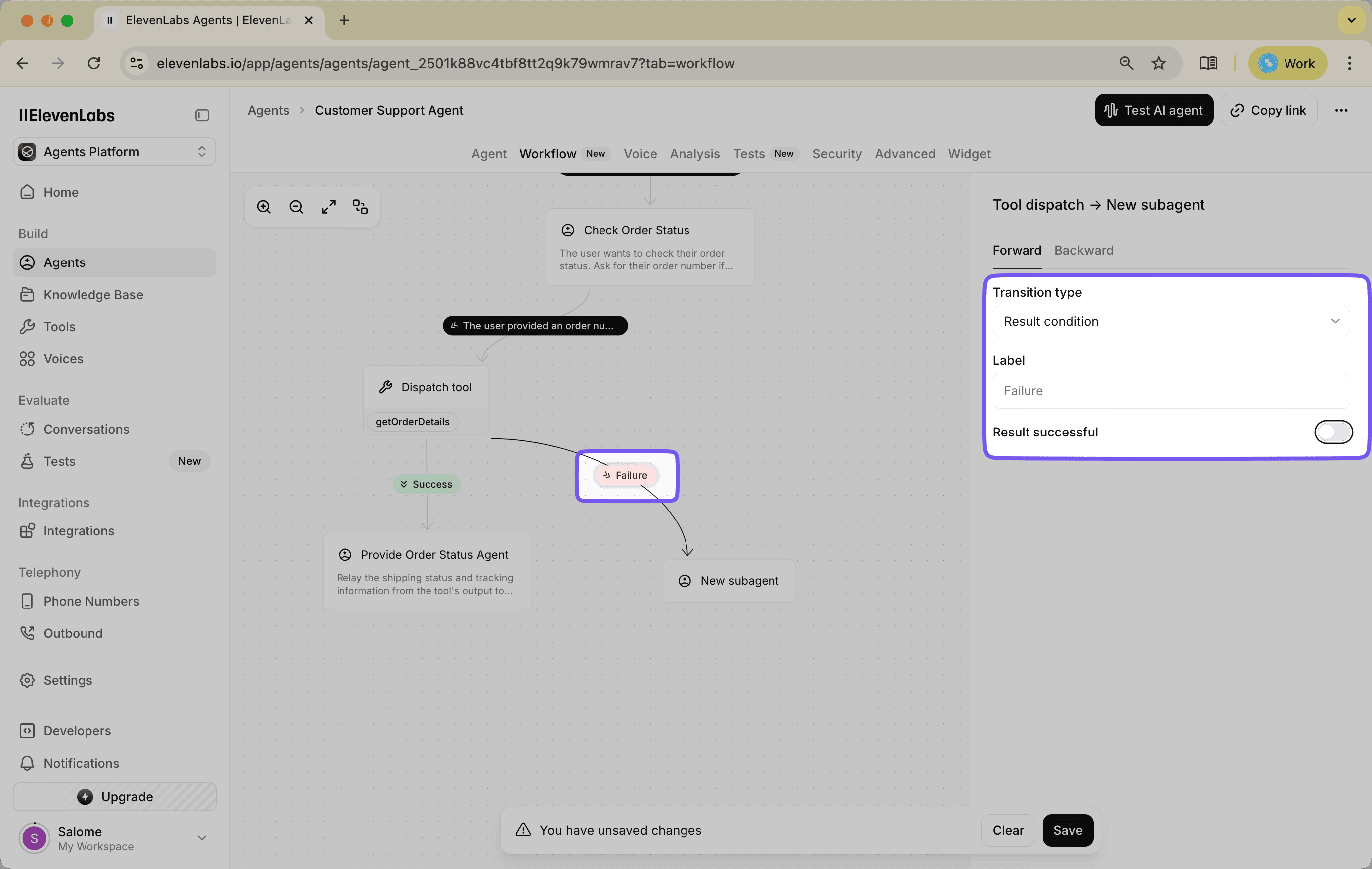

The Failure Path

- Add a second Subagent Node from the Dispatch Tool Node.

- Click "Configure Condition" on this new path.

- Set the Transition type to Result condition.

- This time, make sure the Result successful toggle is disabled.

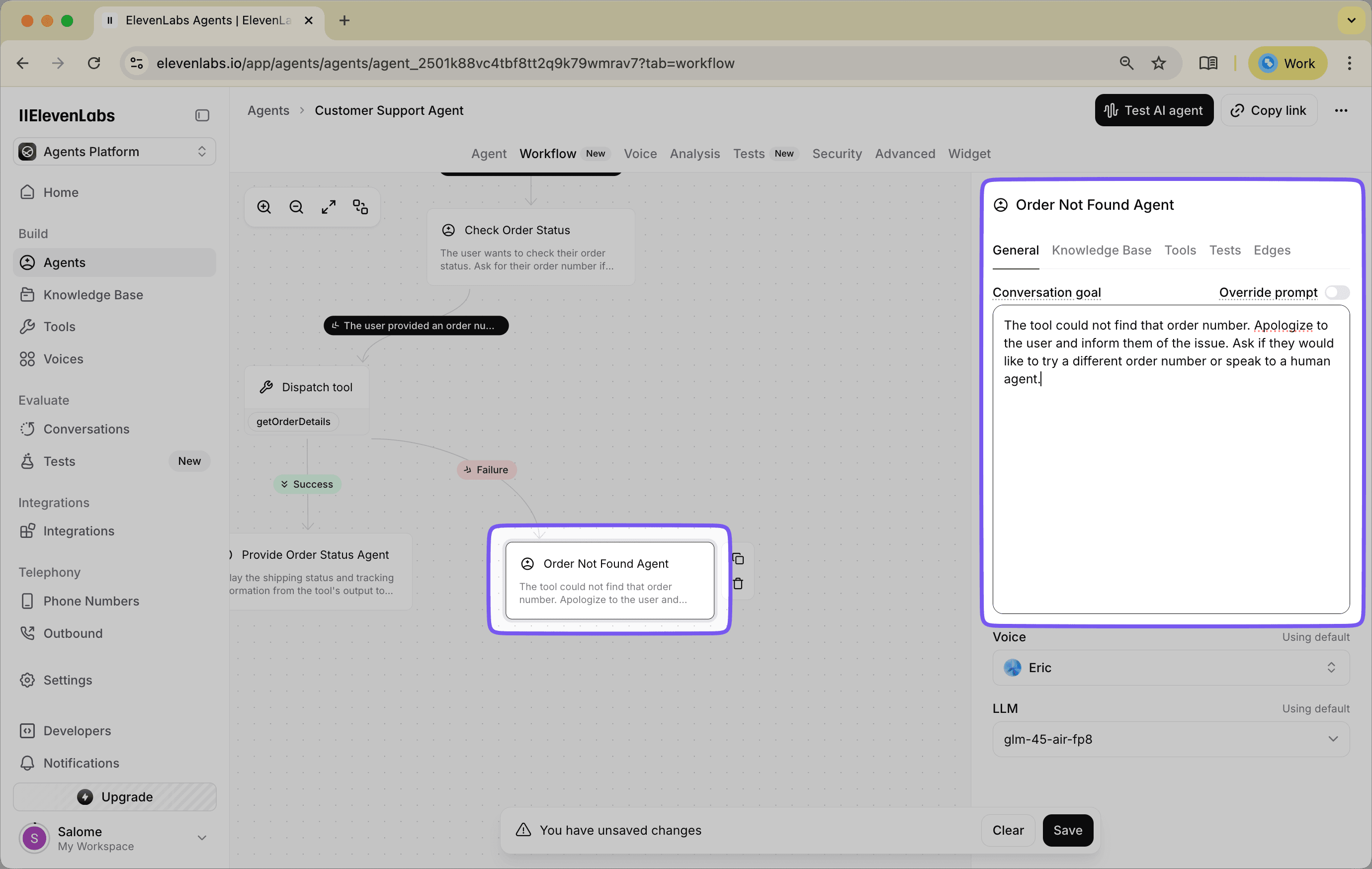

This Subagent will handle the error.

- Title: Order Not Found Agent

- Prompt Example: "The tool could not find that order number. Apologize to the user and inform them of the issue. Ask if they would like to try a different order number or speak to a human agent."

Step 5: Creating Loops and Endpoints

Now we will connect the final paths. This involves creating a loop for users who want to try another order number and defining the conditions for gracefully ending the conversation.

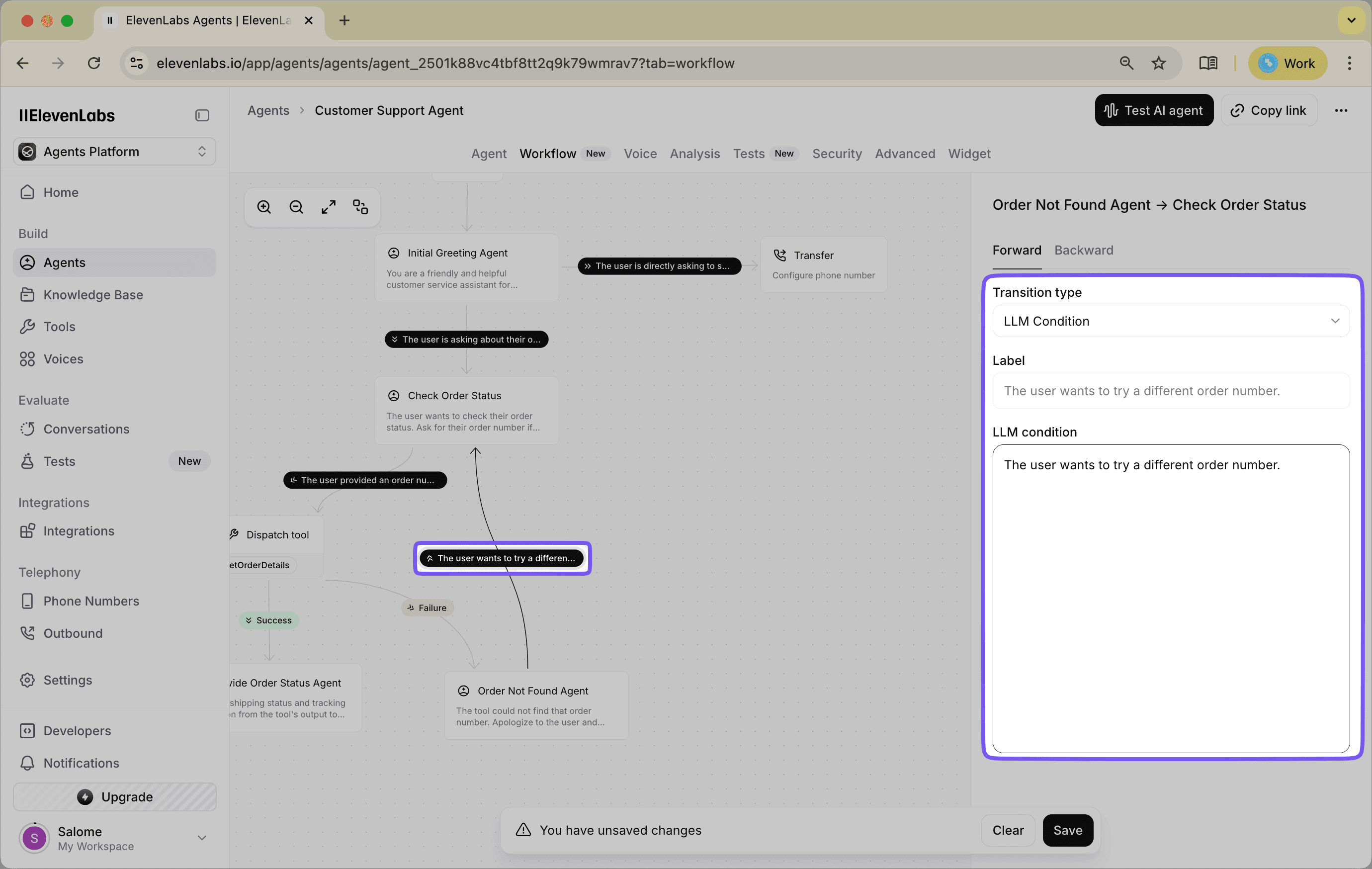

1. Create the "Try Again" Loop

If the getOrderDetails tool fails, the Order Not Found Agent gives the user two choices. We will now create the path for the user who wants to try again.

- Create a path from the Order Not Found Agent node back to the Collect Order Number Agent node (the Subagent on the Check Order Status path that asks for the order number in Step 3). This will form a loop in your workflow.

- Click the "Configure Condition" button on this new path.

- Set the Transition type to LLM Condition.

- For the LLM Condition, describe the user's intent to retry.

- LLM Condition Example: "The user wants to try a different order number."

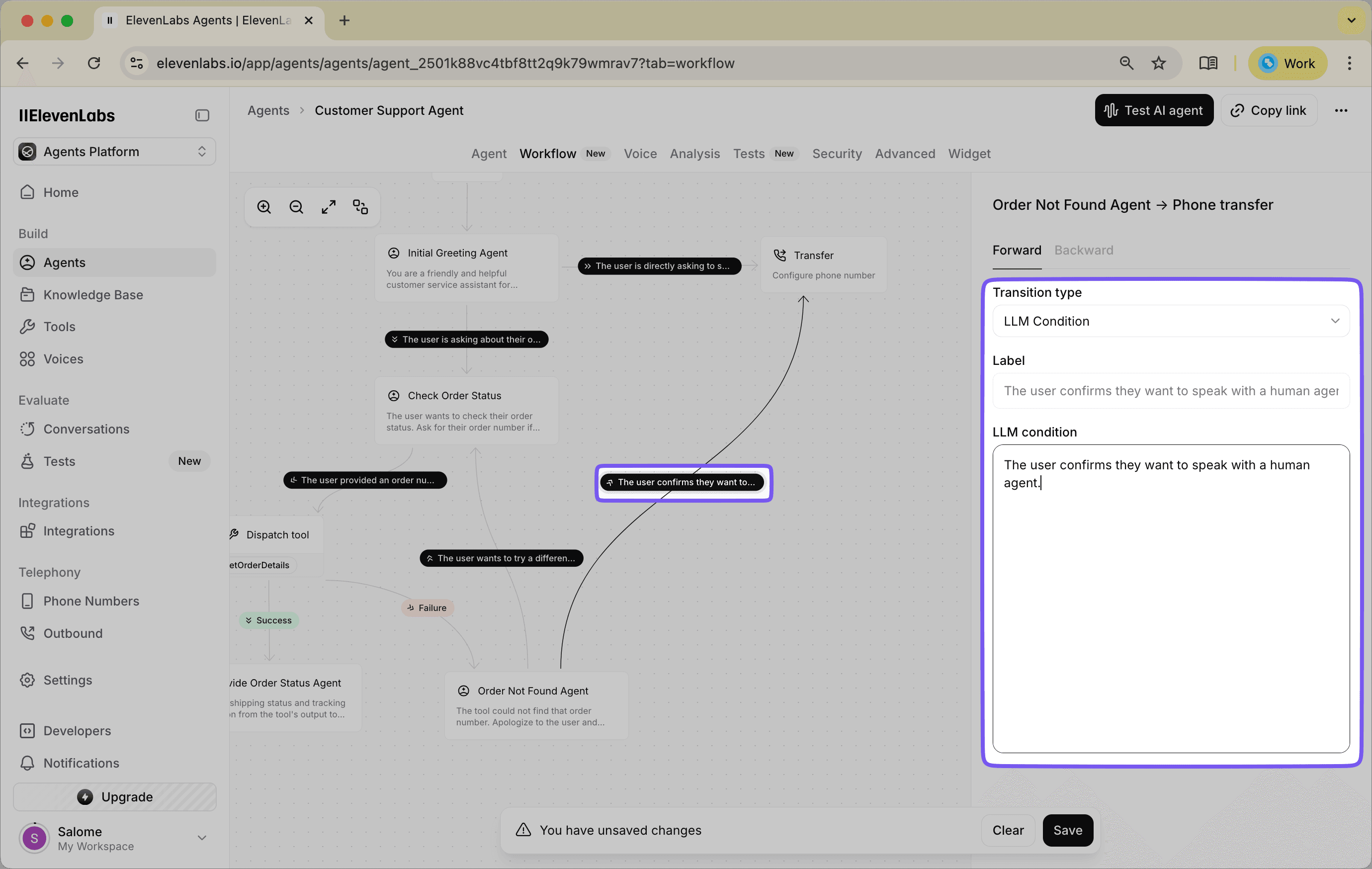

2. Establish the Human Handoff Path

The other choice for the user is to speak to an agent. Let's connect that path.

- Create a path from the Order Not Found Agent node to the Transfer to Live Agent node (the Transfer to Number Node you created in Step 2).

- Click "Configure Condition" and set the Transition type to LLM Condition.

- Define the condition for escalating the call.

- LLM Condition Example: "The user confirms they want to speak with a human agent."

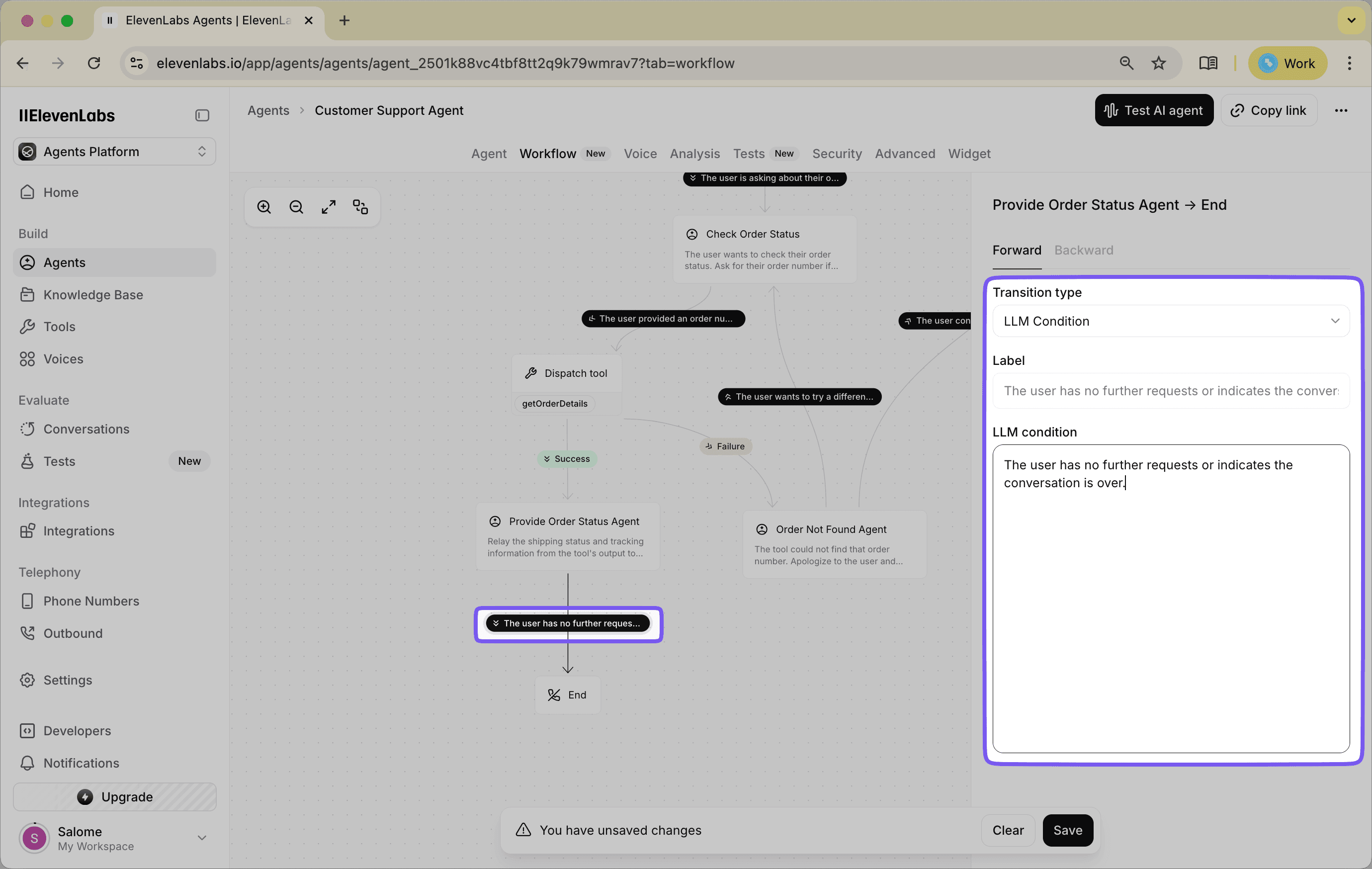

3. Define the Successful Conversation Endpoint

After the agent successfully provides an order status, the conversation should end if the user is satisfied.

- Connect the Provide Order Status Agent node to an End Node.

- Click "Configure Condition" on this final path.

- Set the Transition type to LLM Condition.

- Write a condition that checks if the user's query has been resolved.

- LLM Condition Example: "The user has no further requests or indicates the conversation is over."

This practical example demonstrates how different nodes and conditional paths work together to handle a common customer service scenario. While this covers the essential structure, building a truly effective agent involves additional strategies for making it more efficient, modular, and capable of handling unexpected issues.
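As a sanity check on a design like this one, it can help to write the finished workflow down as a plain graph and confirm that every node can eventually reach a terminal node. The sketch below does that with a reverse traversal. The snake_case node names paraphrase the guide, the dictionary layout is an assumption rather than an ElevenLabs export format, and treating the transfer node as terminal is also an assumption.

```python
from typing import Dict, List, Set

# Each key is a node; each value lists the nodes its outgoing paths lead to.
WORKFLOW: Dict[str, List[str]] = {
    "start": ["initial_greeting"],
    "initial_greeting": ["collect_order_number", "transfer_to_live_agent"],
    "collect_order_number": ["get_order_details"],
    "get_order_details": ["provide_order_status", "order_not_found"],
    "provide_order_status": ["end"],
    "order_not_found": ["collect_order_number", "transfer_to_live_agent"],
    "transfer_to_live_agent": [],
    "end": [],
}
TERMINALS: Set[str] = {"end", "transfer_to_live_agent"}


def nodes_that_can_finish(graph: Dict[str, List[str]], terminals: Set[str]) -> Set[str]:
    """Walk the edges backwards from the terminals and collect what is reached."""
    reverse: Dict[str, List[str]] = {}
    for src, targets in graph.items():
        for dst in targets:
            reverse.setdefault(dst, []).append(src)
    seen = set(terminals)
    stack = list(terminals)
    while stack:
        node = stack.pop()
        for prev in reverse.get(node, []):
            if prev not in seen:
                seen.add(prev)
                stack.append(prev)
    return seen


def dead_ends(graph: Dict[str, List[str]], terminals: Set[str]) -> Set[str]:
    """Nodes from which no terminal node is reachable."""
    return set(graph) - nodes_that_can_finish(graph, terminals)
```

For the workflow built in this guide, `dead_ends(WORKFLOW, TERMINALS)` returns an empty set. Removing the path from the Provide Order Status Agent to the End Node would make that node show up as a dead end.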

Advanced Strategies and Best Practices

Building a functional workflow is the first step. Creating one that is efficient, resilient, and cost-effective requires some additional strategies.

Design for Modularity and Reusability

Instead of building one massive workflow for all possible interactions, it is better to create smaller, specialized agents. You can design a primary "Coordinator" agent whose main job is to understand the user's initial intent and then use Agent Transfer Nodes to pass the conversation to a specialized agent. For example, you could have separate, highly focused agents for Sales, Technical Support, and Account Management. This approach not only keeps your main workflow clean but also allows you to reuse these specialist agents across different applications or platforms.

Implement Comprehensive Error Handling

A user's journey will not always follow the "happy path." A tool might fail, an API might be down, or a user might provide invalid information. It is important to build explicit paths for these scenarios.

- Retry Loops: For transient errors, like a temporary network issue, you can create a loop in your workflow. The "On Failure" edge of a Dispatch Tool Node can lead back to the same node to attempt the action again, perhaps after a short delay or asking the user to confirm their details.

- Alternative Solutions: If a tool consistently fails, provide an alternative. If an order number lookup fails, the next step could be a Subagent that offers to search by the user's email address or phone number instead.

- Proactive Escalation: Design workflows to recognize when a user is getting frustrated. If a user ends up in the same error loop more than twice, automatically route them to a Transfer to Number Node to connect with a human agent.
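The retry loop and the "more than twice" escalation rule above can be combined into one small piece of control logic. This is a sketch under stated assumptions: the function name, the outcome labels, and the idea of counting failures in a local variable are illustrative, and how a workflow tracks loop counts in practice depends on the platform.

```python
from typing import Callable


def run_with_escalation(attempt: Callable[[], bool], max_failures: int = 2) -> str:
    """Retry until success, escalating once failures exceed max_failures."""
    failures = 0
    while True:
        if attempt():
            return "success"
        failures += 1
        if failures > max_failures:
            # The user has hit the same error more than twice: hand off.
            return "transfer_to_number"


# Hypothetical tool that never succeeds, to show the escalation path.
calls = []


def always_fails() -> bool:
    calls.append("attempt")
    return False


outcome = run_with_escalation(always_fails)
```

With the default limit the failing tool is tried three times before the handoff, so the loop can never trap a user indefinitely.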

Optimize for Cost and Performance

Not all tasks require the most powerful large language model. You can make your agent more efficient by using different models at different stages.

- Initial Triage: Use a fast and inexpensive model in your initial Subagent to perform simple intent recognition. The goal here is speed and efficiency, not deep reasoning.

- Complex Reasoning: When the workflow reaches a point that requires detailed analysis or content generation, switch to a more powerful and capable model using a new Subagent Node. This ensures you are only using the more expensive resources when absolutely necessary.

Maintain Context Explicitly

The system automatically carries the conversation history through the workflow. You can create a more natural-sounding interaction by explicitly referencing previously gathered information in your Subagent prompts. For instance, if a user provides their name in an early step, a later prompt could say, "Alright, [User Name], I'm now checking the details for that order." This confirms to the user that the agent is following along and remembers the details of the conversation.
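If you assemble such lines yourself, it is worth handling the case where the detail was never collected, so a raw placeholder is never spoken aloud. The sketch below shows that idea in plain Python; the `collected` dictionary and its `user_name` key are hypothetical and do not describe how ElevenLabs stores conversation data.

```python
def confirmation_line(collected: dict) -> str:
    """Build the confirmation sentence, using the name only if it is known."""
    name = collected.get("user_name")
    opening = f"Alright, {name}," if name else "Alright,"
    return f"{opening} I'm now checking the details for that order."


with_name = confirmation_line({"user_name": "Dana"})
without_name = confirmation_line({})
```

The first call yields "Alright, Dana, I'm now checking the details for that order." and the second falls back to a neutral version without the name.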

The Competitive Landscape: Agent Workflows vs. Other Platforms

ElevenLabs Agent Workflows enter a market with several established methods for building conversational AI. The primary differences lie in the interface, underlying complexity, and the main focus of the platform.

Visual Workflow Builders vs. Code-First Frameworks

- Code-First Frameworks (e.g., LangChain): These are developer libraries that offer maximum control and flexibility. They require knowledge of programming (like Python) to connect language models, tools, and data. While highly customizable, this approach has a steeper learning curve and can slow down initial development.

- ElevenLabs Agent Workflows: This platform provides a visual, no-code interface. It abstracts away the complex code, allowing users to design and manage agent behavior with a drag-and-drop editor. This makes it more accessible for teams with mixed technical skills and can speed up prototyping and deployment.

Comparison to Intent-Based NLU Platforms

- Platforms like Google Dialogflow: These are powerful tools for creating structured conversational experiences, often for chatbots and contact centers. They use a state machine approach based on user intents and entities to guide the conversation. While effective, some users note a steep learning curve for more complex designs.

- ElevenLabs Agent Workflows: While also visual, the design of Agent Workflows is heavily focused on creating dynamic, low-latency voice interactions. A key distinction is the ability to change the agent's voice, personality (via prompt), and even the underlying language model at different nodes within a single conversation flow. This allows for more adaptive and context-aware voice agents.

Focus on Voice and Real-time Interaction

- Many platforms can integrate text-to-speech as a feature.

- ElevenLabs, however, is built with a voice-first approach. The entire workflow system is designed to support the creation of natural, human-sounding voice agents that can handle intricate conversational paths in real time. This includes features for seamless handoffs and integrations with telephony systems.

Limitations and Considerations

Agent Workflows, while offering a visual paradigm, require careful management of potential challenges during design and deployment.

The "Visual Spaghetti" Problem

- The Challenge: For simple agents, the visual map is clean and intuitive. However, as you account for every possible user query, error condition, and conversational detour, the workflow can grow into a complex web of nodes and crisscrossing lines.

- The Impact: A highly dense visual map can become difficult to read, debug, and modify. Onboarding a new team member to understand a sprawling workflow can be just as challenging as having them learn a complex codebase.

Chasing Ghosts When Debugging

- The Challenge: When a conversation goes wrong, the issue isn't always a single broken node. The problem could be more subtle.

- The Impact: Debugging often involves re-tracing the conversational path step-by-step. You have to ask questions like: Was the correct context passed from the previous node? Did a tool return data in an unexpected format? Did the user's phrasing trigger an edge case you didn't anticipate? Finding the root cause can feel like chasing a ghost through the connections in your map.

The Walled Garden Effect

- The Challenge: The speed and convenience of a no-code visual builder come with a trade-off: platform lock-in.

- The Impact: The logic, structure, and configurations you create are native to the ElevenLabs environment. You cannot easily export a complex workflow and run it on a different platform or with a code-based framework. If your project's needs change drastically, migrating away from the platform would mean rebuilding the agent's logic from the ground up.

Conclusion: The Future of AI Conversations is Visual and Dynamic

The development of conversational AI is moving away from single, monolithic scripts and toward more modular, manageable systems. ElevenLabs Agent Workflows represent a major part of this evolution. They provide a visual canvas for designing, testing, and deploying complex, multi-turn conversational agents.

This approach changes how sophisticated agents are constructed:

- Logic becomes explicit. Instead of being buried in code or a massive prompt, the agent's decision-making process is mapped out visually, making it easier to understand and audit.

- Complexity is managed. By breaking down large tasks into smaller, specialized Subagents and tools, developers can build more capable systems without creating an unmanageable single agent.

- Flexibility is built-in. The ability to swap language models, conditionally dispatch tools, and transfer between different AI and human agents provides the components needed for truly adaptive interactions.

The movement is toward a future where the construction of AI conversations is less about pure programming and more about thoughtful design. Visual platforms like Agent Workflows make these advanced capabilities accessible to a wider audience, enabling the creation of voice and text agents that can navigate real-world scenarios with greater precision and control.
